First of all, Pearl Street Mall is just as lovely as I remember, but OMG it is so crowded, with so many new stores and chains. Still, good food, good views, hot weather, lovely walk.

Welcome to Day 2! http://neondataskills.org/data-institute-17/day2/

Our morning session focused on reproducibility and workflows with the great Naupaka Zimmerman. Remember the characteristics of reproducibility: organization, automation, documentation, and dissemination. We focused on organization, and spent an enjoyable hour sorting through an example messy directory of misc data files and code. The directory looked a bit like many of my directories. Lesson learned. We then moved to working with new data and git to reinforce yesterday's lessons. Git was super confusing to me 2 weeks ago, but now I think I love it. We also went back and forth between Jupyter and standalone Python scripts, and abstracted variables, and lo and behold I got my script to run.

The afternoon focused on Lidar (yay!). Prior to coding we talked about discrete and waveform data and collection, and about the OpenTopography (http://www.opentopography.org/) project with Benjamin Gross. The OpenTopography talk was really interesting. They are not just a data distributor any more; they also provide an HPC framework (mostly TauDEM for now) on their servers at SDSC (http://www.sdsc.edu/). They are going to roll out user-initiated HPC functionality soon, so stay tuned for their new "pluggable assets" program. This is well worth checking into. We also spent some time live coding in Python with Bridget Hass, working with a CHM from the SERC site in California, and had a nerve-wracking code challenge to wrap up the day.

Fun additional take-home messages/resources:

- ISO International standard for dates = YYYY-MM-DD

- Missing values in R = NA; in Python they are usually NaN (or None), while raster files often flag no-data with a sentinel value such as -9999

- For cleaning messy data, check out OpenRefine, a free and open-source tool: http://openrefine.org/

- Excel is cray-cray, best practices for spreadsheets: http://www.datacarpentry.org/spreadsheet-ecology-lesson/

- Morpho (from DataOne) to enter metadata: https://www.dataone.org/software-tools/morpho

- Pay attention to file size with your git repositories - check out: https://git-lfs.github.com/. Git is good for things you do with your hands (like code), not for large data.

- Funny how many food metaphors are used in tech teaching: APIs as a menu in a restaurant; git add vs git commit as a grocery cart before and after purchase; finding GIS data is sometimes like shopping for ingredients in a specialty grocery store (that one is mine)...

- Markdown renderer: http://dillinger.io/

- MIT License, like Creative Commons for code: https://opensource.org/licenses/MIT

- "Jupyter" means it runs with Julia, Python & R, who knew?

- There is a new project called "Feather", a file format for passing data frames between Python and R: https://blog.rstudio.org/2016/03/29/feather/

- All the NEON airborne data can be found here: http://www.neonscience.org/data/airborne-data

- Information on the TIFF specification and TIFF tags is here: http://awaresystems.be/. However, their TIFF Tag Viewer is only for Windows.
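Two of the take-homes above (ISO dates and missing values) are easy to try in a few lines of Python. This is only a minimal sketch using the standard library: the date and the height values are made up for illustration, and -9999 is assumed to be the no-data sentinel, which is a common raster convention rather than something Python itself treats as missing.

```python
import math
from datetime import date

# ISO 8601 calendar dates are written YYYY-MM-DD.
flight_day = date(2017, 6, 20)         # hypothetical date, for illustration only
iso_text = flight_day.isoformat()      # date -> "YYYY-MM-DD" string
parsed = date.fromisoformat(iso_text)  # string -> date (Python 3.7+)

NO_DATA = -9999  # assumed sentinel; check your file's metadata for the real one


def mask_no_data(values, sentinel=NO_DATA):
    """Return a copy of values with the sentinel replaced by NaN."""
    return [math.nan if v == sentinel else float(v) for v in values]


def mean_ignoring_nan(values):
    """Average only the valid (non-NaN) values; NaN if none are valid."""
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan


heights = [12.5, -9999, 8.0, -9999, 15.5]  # made-up canopy heights in meters
masked = mask_no_data(heights)

print(iso_text)
print(mean_ignoring_nan(masked))
```

Without the masking step, the two -9999 values would be averaged in as if they were real heights, which is exactly the kind of silent error the sentinel convention invites.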

Thanks to everyone today! Megan Jones (our fearless leader), Naupaka Zimmerman (Reproducibility), Tristan Goulden (Discrete Lidar), Keith Krause (Waveform Lidar), Benjamin Gross (OpenTopography), Bridget Hass (coding lidar products).

Our home for the week

I left Boulder 20 years ago on a wing and a prayer with a PhD in hand, overwhelmed with bittersweet emotions. I was sad to leave such a beautiful city, nervous about what was to come, but excited to start something new in North Carolina. My future was uncertain, and as I took off from DIA that final time I basically had Tom Petty's Free Fallin' and Learning to Fly on repeat on my Walkman. Now I am back, and summer in Boulder is just as breathtaking as I remember it: clear blue skies, the stunning Flatirons making a play at outshining the snow-dusted Rockies behind them, and crisp fragrant mountain breezes acting as my Madeleine. I'm back to visit the National Ecological Observatory Network (NEON) headquarters, attend their 2017 Data Institute, and re-invest in my skillset for open reproducible workflows in remote sensing.

Day 1 Wrap Up from the NEON Data Institute 2017

What a day! http://neondataskills.org/data-institute-17/day1/

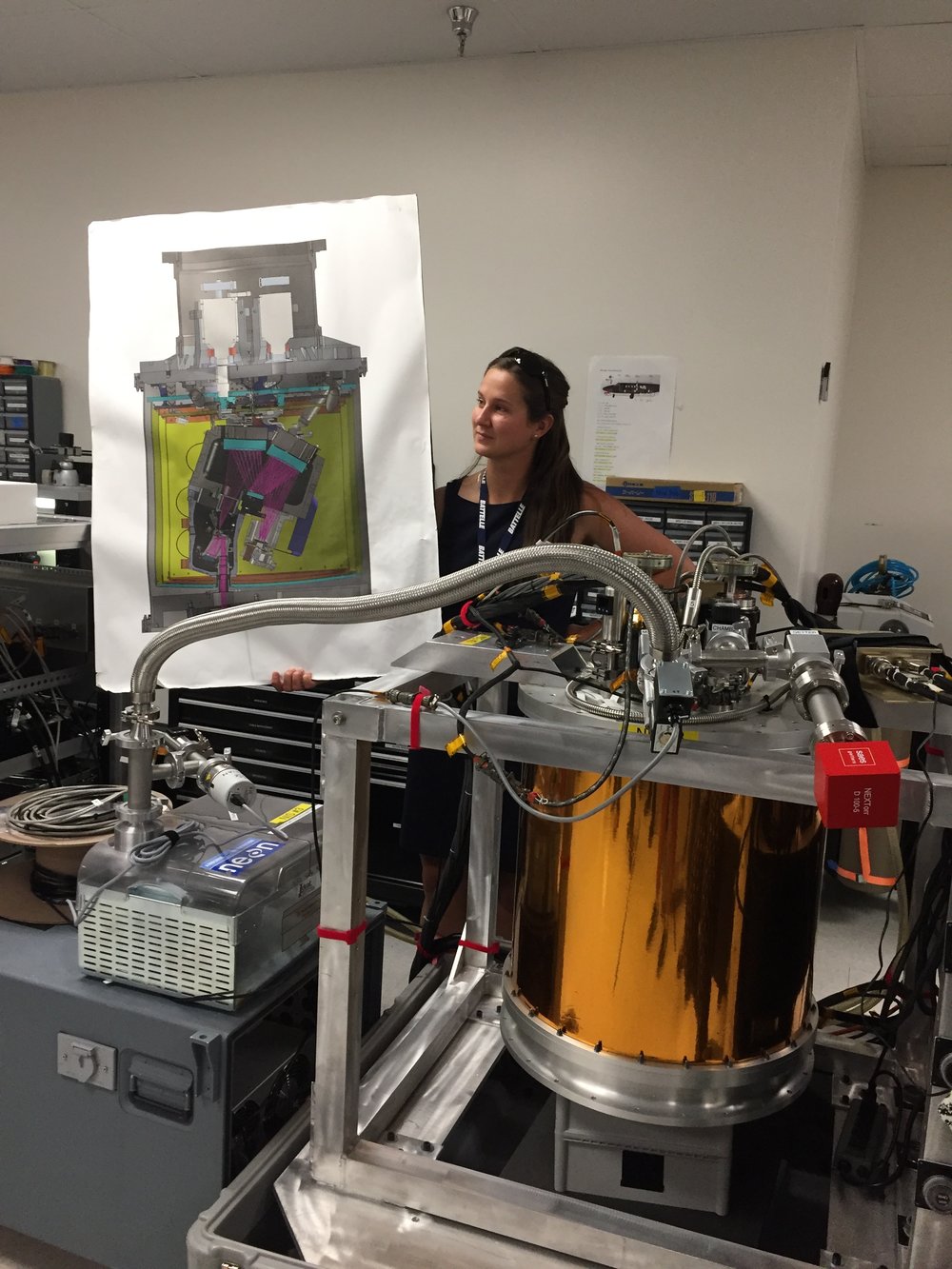

Attendees (about 30) included graduate students, old dogs (new tricks!) like me, and research scientists interested in building reproducible workflows into their work. We are a mix of ages and genders. The morning session focused on learning about the NEON program (http://www.neonscience.org/): its purpose, sites, sensors, data, and protocols. NEON, funded by NSF and managed by Battelle, was conceived in 2004 and will go online in Jan 2018 for a 30-year mission providing free and open data on the drivers of and responses to ecological change. NEON data comes from IS (instrumented systems), OS (observation systems), and RS (remote sensing). We focused on the Airborne Observation Platform (AOP), which uses 2 (soon to be 3) aircraft, each with a payload of a hyperspectral sensor (from JPL; 426 bands of 5 nm each covering 380-2510 nm, 1 mRad IFOV, 1 m resolution at 1000 m AGL), a lidar sensor (Optech and soon to be Riegl; discrete and waveform), and an RGB camera (PhaseOne D8900). These sensors produce co-registered raw data, which are processed at NEON headquarters into various levels of data products. Flights are planned to cover each NEON site once, timed to capture 90% or higher peak greenness, which is pretty complicated when distance and weather are taken into account. Pilots and techs are on the road and in the air from March through October collecting these data.

In the afternoon session, we got through a fairly immersive dunk into Jupyter notebooks for exploring hyperspectral imagery in HDF5 format. We did exploration, band stacking, widgets, and vegetation indices. We closed with a fast discussion about TGF (The Git Flow): the way to store, share, control versions of your data and code to ensure reproducibility. We forked, cloned, committed, pushed, and pulled. Not much more to write about, but the whole day was awesome!
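For anyone wondering what a vegetation index looks like in code, here is a minimal sketch of NDVI, one common index. I am not claiming it is the exact index or code we used in class. It uses plain Python lists so it runs anywhere; in the notebooks the bands would be arrays read from the HDF5 file, and the reflectance values below are made up.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    if total == 0:
        return float("nan")  # index is undefined when both bands are zero
    return (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two equally sized 2D grids (lists of rows)."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Made-up reflectance values for a 2 x 2 image (illustration only).
nir_band = [[0.50, 0.40], [0.30, 0.0]]
red_band = [[0.10, 0.10], [0.30, 0.0]]

result = ndvi_grid(nir_band, red_band)
for row in result:
    print(row)
```

Healthy vegetation reflects strongly in the near infrared and absorbs red light, so higher values suggest greener pixels, and equal reflectance in both bands gives zero.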

Fun additional take-home messages:

- NEON is amazing. I should build some class labs around NEON data, and NEON classroom training materials are available: http://www.neonscience.org/resources/data-tutorials

- Making participants do organized homework is necessary for complicated workshop content: http://neondataskills.org/workshop-event/NEON-Data-Insitute-2017

- HDF5 as a possible alternative data format for Lidar, holding both discrete and waveform data

- NEON imagery data is FedExed to headquarters daily after collection

- I am a crap python coder

- #whofallsbehindstaysbehind

- Tabs are my friend

Thanks to everyone today, including: Megan Jones (Main leader), Nathan Leisso (AOP), Bill Gallery (RGB camera), Ted Haberman (HDF5 format), David Hulslander (AOP), Claire Lunch (Data), Cove Sturtevant (Towers), Tristan Goulden (Hyperspectral), Bridget Hass (HDF5), Paul Gader, Naupaka Zimmerman (GitHub flow).

Hi all,

Just trying to get my head around some of the new big raster processors out there, in addition of course to Google Earth Engine. Bear with me (bare?) while I sort through these. Thanks to raster sleuth Stefania Di Tomasso for the leg work.

1. GeoTrellis (https://geotrellis.io/)

GeoTrellis is a Scala-based raster processing engine, and it is one of the first geospatial libraries on Spark. GeoTrellis is able to process big datasets. For regional or statewide datasets, users can interact with geospatial data and see results in real time in an interactive web application. For larger raster datasets (e.g. US NED), GeoTrellis performs fast batch processing, using Akka clustering to distribute data across the cluster. GeoTrellis was designed to solve three core problems, with a focus on raster processing:

- Creating scalable, high performance geoprocessing web services;

- Creating distributed geoprocessing services that can act on large data sets; and

- Parallelizing geoprocessing operations to take full advantage of multi-core architecture.

Features:

- GeoTrellis is designed to help a developer create simple, standard REST services that return the results of geoprocessing models.

- GeoTrellis will automatically parallelize and optimize your geoprocessing models where possible.

- In the spirit of the object-functional style of Scala, it is easy to both create new operations and compose new operations with existing operations.
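To make the "parallelize geoprocessing operations" idea concrete for myself, here is a minimal Python sketch of the general tile-and-combine pattern. This is not the GeoTrellis API (GeoTrellis is Scala); the function names and the tiny raster are hypothetical. It uses a thread pool to keep the example simple; for CPU-bound work in CPython you would likely swap in a process pool to actually use multiple cores.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tiles(raster, tile_rows):
    """Split a raster (list of rows) into horizontal strips of tile_rows rows each."""
    return [raster[i:i + tile_rows] for i in range(0, len(raster), tile_rows)]


def tile_max(tile):
    """The per-tile operation: largest cell value in one tile."""
    return max(max(row) for row in tile)


def parallel_max(raster, tile_rows=2, workers=4):
    """Map tile_max over the tiles concurrently, then combine the partial results."""
    tiles = split_into_tiles(raster, tile_rows)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial = list(pool.map(tile_max, tiles))
    return max(partial)


# Made-up 5 x 3 raster (illustration only).
raster = [
    [1, 4, 2],
    [7, 3, 5],
    [9, 0, 6],
    [2, 8, 1],
    [3, 3, 3],
]

print(parallel_max(raster))
```

The pattern only works this simply for operations where partial results can be combined (max, sum, count); focal operations that need neighboring cells would also need overlapping tile edges.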

2. GeoPySpark: in short, GeoTrellis for the Python community

GeoPySpark provides Python bindings for working with geospatial data on PySpark (PySpark is the Python API for Spark). Spark is an open-source processing engine originally developed at UC Berkeley in 2009. GeoPySpark makes GeoTrellis (https://geotrellis.io/) accessible to the Python community: Scala is a difficult language, so they have created this Python library.

3. RasterFoundry

Last week we held another bootcamp on Spatial Data Science. We had three packed days learning about the concepts, tools and workflow associated with spatial databases, analysis and visualizations. Our goal was not to teach a specific suite of tools but rather to teach participants how to develop and refine repeatable and testable workflows for spatial data using common standard programming practices.

On Day 1 we focused on setting up a collaborative virtual data environment through virtual machines and spatial databases (PostgreSQL/PostGIS) with multi-user editing and versioning (GeoGig). We also talked about open data and open standards, and modern data formats and tools (GeoJSON, GDAL). On Day 2 we focused on open analytical tools for spatial data, covering Python (e.g. PySAL, NumPy, PyCharm, IPython Notebook) and R tools. Day 3 was dedicated to the web stack, and visualization via ESRI Online, CartoDB, and Leaflet. Web mapping is great, and as OpenGeo.org says: “Internet maps appear magical: portals into infinitely large, infinitely deep pools of data. But they aren't magical, they are built of a few standard pieces of technology, and the pieces can be re-arranged and sourced from different places. … Anyone can build an internet map.”
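Since GeoJSON came up as one of the modern data formats, here is a minimal sketch of what it looks like when built by hand with Python's standard json module. The helper names, site names, and coordinates are made up for illustration. The one detail worth remembering is that GeoJSON positions are ordered longitude first, then latitude.

```python
import json


def point_feature(lon, lat, properties):
    """Build a GeoJSON Feature with Point geometry; coordinates are [longitude, latitude]."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


def feature_collection(features):
    """Wrap features in a GeoJSON FeatureCollection."""
    return {"type": "FeatureCollection", "features": list(features)}


# Hypothetical sites with approximate, made-up coordinates.
collection = feature_collection([
    point_feature(-122.26, 37.87, {"name": "Example site A"}),
    point_feature(-105.27, 40.02, {"name": "Example site B"}),
])

text = json.dumps(collection, indent=2)  # serialize to a GeoJSON string
round_trip = json.loads(text)            # parse it back into Python objects

print(text)
```

Because it is plain JSON text, the same output can be read by GDAL, loaded into PostGIS, or dropped onto a Leaflet map.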

All-in-all it was a great time spent with a collection of very interesting mapping professionals from around the country. Thanks to everyone!

We had a great day today exploring ESRI open tools in the GIF. We had a full class of 30 participants and two great ESRI instructors (leaders? evangelists?), John Garvois and Allan Laframboise. We worked through a range of great online mapping examples (data, design, analysis, and 3D) in the morning, and focused on using the ESRI Leaflet API in the afternoon. Here are some of the key resources out there.

- Main ESRI Open Information: http://www.esri.com/software/open

- Slide deck from today: http://slides.com/alaframboise/geodev-hackerlabs#/

- Afternoon example using Leaflet: http://esri.github.io/esri-leaflet/

- ESRI's developer toolkits: https://developers.arcgis.com/en/, including

- ESRI's javascript API: https://developers.arcgis.com/javascript/beta/

Great Stuff! Thanks Allan and John