At UC Berkeley and at UC ANR, my outreach program involves the creation, integration, and application of research-based technical knowledge for the benefit of the public, policy-makers, and land managers. My work focuses on environmental management, vegetation change, vegetation monitoring, and climate change. Critical to my work is the ANR Statewide Program in Informatics and GIS (IGIS), which I began in 2012 and which is now really cranking with our crack team of IGIS people. We developed IGIS to provide research technology and data support for ANR’s mission objectives through the analysis and visualization of spatial data. We use state-of-the-art web, database, and mapping technology to acquire, store, and disseminate the large data sets critical to the ANR mission. We develop and deliver training on research technologies related to important agricultural and natural resource issues statewide. We facilitate networking and collaboration across ANR and UC on issues related to research technology and data. And we deliver research support through a service center for project-level work that has Division-wide application. Since I am off on sabbatical, I have decided to take some time to think about my outreach program and how to evaluate its impact.

There is a rich literature on the history of extension since its 1914 beginnings, and specifically on how extension programs around the nation have measured impact. Extension has explored a variety of ways to measure the value of engagement for the public good (Franz 2011, 2014). Early attempts to measure performance focused on activity and reach: the number of individuals served and the quality of the interaction with those individuals. Over time, extension turned its attention to program outcomes. Recently, we’ve been focusing on articulating the Public Values of extension via Condition Change metrics (Rennekamp and Engle 2008). One popular evaluation method has been the Logic Model, which extension educators use to evaluate the effectiveness of a program by developing a clear workflow or plan that links program outcomes or impacts with outputs, activities, and inputs. We’ve developed a fair number of these models for the Sierra Nevada Adaptive Management Program (SNAMP), for example. Impacts include measured changes in learning, behavior, or conditions across engagement efforts. More recently, change in policy has become an additional measure of impact. I also think measuring reach is needed, and possible.

So, just to throw it out there, here is my master table of impact that I try to use for measuring and evaluating impact of my outreach program, and I’d be interested to hear what you all think of it.

- Change in reach: Geographic scope, Location of events, Number of users, etc.

- Change in activity: Usage, Engagement with a technology, New users, Sessions, Average session duration

- Change in learning: Participants have learned something new from delivered content

- Change in action, behavior, method: New efficiencies, Streamlined protocols, Adoption of new data, Adoption of best practices

- Change in policy: Evidence of contributions to local, state, or federal regulations

- Change in outcome: Measured conditions have improved (condition change)
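To make a table like this operational, each row needs at least one indicator with a value at the start and at the end of a program period, so that "change" is literally a difference. Here is a minimal Python sketch of that idea; the `Indicator` class, the category keys, and the example numbers are hypothetical illustrations, not part of an existing IGIS tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical category keys mirroring the six rows of the impact table.
IMPACT_CATEGORIES = ("reach", "activity", "learning", "action", "policy", "outcome")


@dataclass
class Indicator:
    category: str     # one of IMPACT_CATEGORIES
    name: str         # e.g. "Number of users"
    baseline: float   # value at the start of the evaluation period
    follow_up: float  # value at the end of the evaluation period

    def change(self) -> float:
        """Absolute change from baseline to follow-up."""
        return self.follow_up - self.baseline

    def percent_change(self) -> Optional[float]:
        """Relative change in percent; None when the baseline is zero."""
        if self.baseline == 0:
            return None
        return 100.0 * self.change() / self.baseline


def summarize(indicators: List[Indicator]) -> Dict[str, Dict[str, float]]:
    """Group absolute changes by impact category, rejecting unknown categories."""
    summary: Dict[str, Dict[str, float]] = {c: {} for c in IMPACT_CATEGORIES}
    for ind in indicators:
        if ind.category not in summary:
            raise ValueError(f"unknown impact category: {ind.category}")
        summary[ind.category][ind.name] = ind.change()
    return summary


# Hypothetical example values, for illustration only.
example = [
    Indicator("reach", "Counties with events", 10, 25),
    Indicator("activity", "Monthly sessions", 400, 520),
]
print(summarize(example))
```

Categories with no indicators stay as empty dictionaries, which makes the hard-to-measure rows (policy, outcome) visibly empty rather than silently missing.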

I recently used this framework to help me think about impact of the IGIS program, and I share some results here.

Measuring Reach. The IGIS program has developed and delivered workshops throughout California, through the leadership of Sean Hogan, Shane Feirer, and Andy Lyons (http://igis.ucanr.edu/IGISTraining). We manage and track all this activity through a custom data tracking dashboard that IGIS developed (using Google Sheets as a database linked to ArcGIS Online to render maps - very cool), and thus can provide key metrics about our reach throughout California. Together, we have delivered 52 workshops across California since July 2015 and reached nearly 800 people. These include workshops on GIS for Forestry, GIS for Agriculture, Drone Technology, WebGIS, Mobile Data Collection, and other topics. This is an impressive record of reach: we have delivered workshops from Humboldt to the Imperial Valley, and the attendees (n=766) have come from all over California. Check this map out:

Measuring Impact. At each workshop, we provide a feedback mechanism via an evaluation form and use this input to understand client satisfaction, reported changes in learning, and reported changes in participant workflow. We’ve been doing this for years, but I now think the questions we ask on those surveys need to change. Right now we mostly capture client satisfaction; we need to do a better job of capturing change in learning and change in action.

Having done this exercise, I can clearly see that reach and activity are perhaps the easiest things to measure. We have information tools at our fingertips to do this: online web mapping of participant zip codes, and Google Analytics to track website activity. The other impacts (change in action, contributions to policy, and actual condition change) are tough to measure. I think extension will continue to struggle with these, but they are critical to help us articulate our value to the public. More work to do!
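Reach and activity are easy precisely because they reduce to counting. A minimal sketch, assuming participant records that carry a zip code and a session log with start and end times; the field names, the data, and the definition of a "new user" (someone not seen in an earlier period) are assumptions, and analytics services such as Google Analytics define sessions and users in their own ways.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Set, Tuple

Session = Tuple[str, datetime, datetime]  # (user_id, start, end)


def reach_metrics(participants: List[Dict[str, str]]) -> Dict[str, int]:
    """Count participants and distinct zip codes (a rough proxy for geographic scope)."""
    zips = {p["zip"] for p in participants}
    return {"participants": len(participants), "zip_codes": len(zips)}


def activity_metrics(log: List[Session], known_users: Iterable[str] = ()) -> Dict[str, float]:
    """Sessions, users, new users, and average session duration in minutes."""
    users: Set[str] = {user for user, _, _ in log}
    minutes = [(end - start).total_seconds() / 60 for _, start, end in log]
    return {
        "sessions": len(log),
        "users": len(users),
        "new_users": len(users - set(known_users)),
        "avg_session_min": sum(minutes) / len(minutes) if minutes else 0.0,
    }


# Hypothetical data for illustration only.
participants = [{"zip": "95616"}, {"zip": "95521"}, {"zip": "95616"}]
log = [
    ("u1", datetime(2017, 6, 1, 9, 0), datetime(2017, 6, 1, 9, 10)),
    ("u2", datetime(2017, 6, 2, 14, 0), datetime(2017, 6, 2, 14, 4)),
    ("u1", datetime(2017, 6, 5, 8, 30), datetime(2017, 6, 5, 8, 36)),
]
print(reach_metrics(participants))
print(activity_metrics(log, known_users={"u2"}))
```

Running the same functions on two periods and subtracting the results gives "change in reach" and "change in activity" directly.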

References

Franz, Nancy K. 2011. “Advancing the Public Value Movement: Sustaining Extension During Tough Times.” Journal of Extension 49 (2): 2COM2.

———. 2014. “Measuring and Articulating the Value of Community Engagement: Lessons Learned from 100 Years of Cooperative Extension Work.” Journal of Higher Education Outreach and Engagement 18 (2): 5.

Rennekamp, Roger A., and Molly Engle. 2008. “A Case Study in Organizational Change: Evaluation in Cooperative Extension.” New Directions for Evaluation 2008 (120): 15–26.

UC ANR's IGIS program hosted 36 drone enthusiasts for a three-day DroneCamp in Davis, California. DroneCamp was designed for participants with little to no experience in drone technology who are interested in using drones for a variety of real-world mapping applications. The goals of DroneCamp were to:

- Gain a broader understanding of the drone mapping workflow, including goal setting, mission planning, data collection, data analysis, and communication & visualization;

- Learn about the different types of UAV platforms and sensors, and match them to specific mission objectives;

- Get hands-on experience with flight operations, data processing, and data analysis; and

- Network with other drone enthusiasts and build the California drone ecosystem.

The IGIS crew, including Sean Hogan, Andy Lyons, Maggi Kelly, Robert Johnson, Kelly Easterday, and Shane Feirer were on hand to help run the show. We also had three corporate sponsors: GreenValley Intl, Esri, and Pix4D. Each of these companies had a rep on hand to give presentations and interact with the participants.

Day 1 of #DroneCamp2017 covered some of the basics: why drones are an increasingly important part of our mapping and field equipment portfolio; different platforms and sensors (and there are so many!); software options; and examples. Brandon Stark gave a great overview of the University of California UAV Center of Excellence and of regulations, and Andy Lyons got us all ready to take the Part 107 license test. We hope everyone here gets their license! We closed with an interactive panel of experienced drone users (Kelly Easterday, Jacob Flanagan, Brandon Stark, and Sean Hogan) who shared their experiences planning missions, flying and traveling with drones, and project results. A quick evaluation of the day showed that the vast majority of people had learned something specific they could use at work, which is great. Plus we had a cool flight simulator station for people to practice flying (and crashing).

Day 2 was a field day: we spent most of the day at the Davis hobbycraft airfield, where we practiced takeoffs, landings, mission planning, and emergency maneuvers. We had an excellent lunch provided by the Street Cravings food truck. What a day! It was hot hot hot, but there was lots of shade and a nice breeze. Anyway, we had a great day, with everyone getting their hands on the controls. Our Esri rep Mark Romero gave us a demo of Esri's Drone2Map software and some of the lidar functionality in ArcGIS Pro.
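Much of mission planning comes down to a few geometric relationships between camera, altitude, and overlap. A minimal sketch for a nadir-pointing frame camera over flat ground; the camera numbers are hypothetical placeholders, and flight-planning apps also account for terrain, lens distortion, and wind, which this ignores.

```python
def ground_sampling_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                                image_width_px: int, altitude_m: float) -> float:
    """Ground size of one pixel (cm), from the pinhole-camera relationship."""
    return sensor_width_mm * altitude_m * 100.0 / (focal_length_mm * image_width_px)


def footprint_width_m(sensor_width_mm: float, focal_length_mm: float,
                      altitude_m: float) -> float:
    """Across-track ground width covered by one image (m)."""
    return sensor_width_mm / focal_length_mm * altitude_m


def line_spacing_m(footprint_m: float, sidelap: float) -> float:
    """Distance between adjacent flight lines for a given side overlap (fraction 0-1)."""
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be in [0, 1)")
    return footprint_m * (1.0 - sidelap)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px wide, flown at 100 m AGL.
gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, 100.0)
width = footprint_width_m(13.2, 8.8, 100.0)
spacing = line_spacing_m(width, sidelap=0.7)
print(f"GSD {gsd:.2f} cm/px, footprint {width:.0f} m, line spacing {spacing:.0f} m")
```

Flying lower or raising the side overlap both shrink line spacing, which is why high-overlap, high-resolution missions take longer and use more batteries.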

Day 3 focused on data analysis. We had three workshops ready for the group to choose from: forestry, agriculture, and rangelands. Before the workshops we had great talks from Jacob Flanagan of GreenValley Intl and from Ali Pourreza of the Kearney Research and Extension Center. Ali is developing a drone-imagery-based database of the individual trees and vines at Kearney; he calls it the "Virtual Orchard". Jacob talked about the overall mission of GVI and how the company is moving into more comprehensive field and drone-based lidar mapping and software. Angad Singh from Pix4D gave us a master class in mapping from drones, covering georeferencing, the Pix4D workflow, and some of the checks produced for you at the end of processing.
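Georeferencing checks of this kind boil down to comparing where ground control points (GCPs) were surveyed with where they land in the reconstructed model. A minimal sketch of a per-axis RMS error using hypothetical coordinates; this illustrates the arithmetic and is not Pix4D's implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def rmse(errors: Sequence[float]) -> float:
    """Root mean square of a list of residuals."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def gcp_rmse(surveyed: List[Point], computed: List[Point]) -> Dict[str, float]:
    """Per-axis RMS error between surveyed GCP positions and their positions in the model."""
    if not surveyed or len(surveyed) != len(computed):
        raise ValueError("need matching, non-empty lists of points")
    # Residual = model position minus surveyed position, per axis.
    residuals = [tuple(c - s for s, c in zip(sp, cp)) for sp, cp in zip(surveyed, computed)]
    return {axis: rmse([r[i] for r in residuals]) for i, axis in enumerate("xyz")}


# Hypothetical GCPs in a local metric coordinate system.
surveyed = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
computed = [(0.03, 0.04, 0.0), (10.03, -0.04, 0.1)]
print(gcp_rmse(surveyed, computed))
```

Squaring the residuals keeps errors in opposite directions (like the y values here) from cancelling out, which a plain mean would not.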

One of our key goals for DroneCamp was to jump-start our California Drone Ecosystem concept, which I talk about in my CalAg Editorial. We are still in the early days of this emerging field, and we can learn a lot from each other as we develop best practices for workflows, platforms and sensors, software, outreach, etc. Our research and decision-making teams have become larger, more distributed, and multi-disciplinary, with experts and citizens working together, so these kinds of collaboratives are increasingly important. We need to collaborate on data collection, storage, and sharing; innovation; analysis; and solutions. If any of you out there want to join us in our California drone ecosystem, drop me a line.

Thanks to ANR for hosting us, thanks to the wonderful participants, and thanks especially to our sponsors (GreenValley Intl, Esri, and Pix4D). Specifically, thanks to:

- Mark Romero and Esri for showing us Drone2Map, and the ArcGIS Image repository and tools, and the trial licenses for ArcGIS;

- Angad Singh from Pix4D for explaining Pix4D and for providing licenses to the group; and

- Jacob Flanagan from GreenValley Intl for your insights into lidar collection and processing, and for all your help showcasing your amazing drones.

#KeepCalmAndDroneOn!

First of all, Pearl Street Mall is just as lovely as I remember, but OMG it is so crowded, with so many new stores and chains. Still, good food, good views, hot weather, lovely walk.

Welcome to Day 2! http://neondataskills.org/data-institute-17/day2/

Our morning session focused on reproducibility and workflows with the great Naupaka Zimmerman. Remember the characteristics of reproducibility: organization, automation, documentation, and dissemination. We focused on organization and spent an enjoyable hour sorting through an example messy directory of miscellaneous data files and code. The directory looked a bit like many of my directories. Lesson learned. We then moved to working with new data and git to reinforce yesterday's lessons. Git was super confusing to me two weeks ago, but now I think I love it. We also went back and forth between Jupyter and stand-alone Python scripts, abstracted variables, and lo and behold, I got my script to run.
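Organizing a messy directory can start with a dry run: decide where each file should go before moving anything. A minimal sketch; the folder names and the extension-to-folder mapping are hypothetical conventions, not the ones used in the lesson.

```python
from pathlib import PurePath
from typing import Dict, Iterable

# Hypothetical mapping from (lower-case) file extension to project folder.
FOLDER_FOR_SUFFIX = {
    ".csv": "data/raw", ".tif": "data/raw", ".h5": "data/raw",
    ".py": "code", ".ipynb": "code", ".r": "code",
    ".md": "docs", ".txt": "docs",
}


def plan_layout(filenames: Iterable[str], default: str = "misc") -> Dict[str, str]:
    """Map each file name to a target folder based on its case-insensitive extension."""
    plan = {}
    for name in filenames:
        suffix = PurePath(name).suffix.lower()
        plan[name] = FOLDER_FOR_SUFFIX.get(suffix, default)
    return plan


messy = ["Final_data_v2.CSV", "analysis.py", "notes.txt", "plot1.png", "clean.R"]
for name, folder in plan_layout(messy).items():
    print(f"{name} -> {folder}/")
```

Keeping the conventions in one dictionary, rather than hard-coding paths inside the loop, is the same move as abstracting variables out of a script.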

The afternoon focused on Lidar (yay!). Before coding, we talked about discrete and waveform data and collection, and about the OpenTopography project (http://www.opentopography.org/) with Benjamin Gross. The OpenTopography talk was really interesting. They are not just a data distributor any more; they also provide an HPC framework (mostly TauDEM for now) on their servers at SDSC (http://www.sdsc.edu/). They are going to roll out user-initiated HPC functionality soon, so stay tuned for their new "pluggable assets" program. This is well worth checking into. We also spent some time live coding in Python with Bridget Hass, working with a CHM from the NEON SERC site in Maryland, and had a nerve-wracking code challenge to wrap up the day.
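At its simplest, a canopy height model (CHM) is the difference between a digital surface model (top of canopy) and a digital terrain model (bare earth). A minimal NumPy sketch of that subtraction plus a height-threshold classification; the -9999 no-data value, the 2 m threshold, and the toy arrays are assumptions, and production CHMs may use more elaborate algorithms.

```python
import numpy as np

NODATA = -9999.0  # assumed no-data sentinel in the input rasters


def canopy_height_model(dsm, dtm, nodata=NODATA):
    """CHM = DSM - DTM, with no-data cells set to NaN and small negative heights clamped to 0."""
    dsm = np.asarray(dsm, dtype=float)
    dtm = np.asarray(dtm, dtype=float)
    chm = dsm - dtm
    chm[(dsm == nodata) | (dtm == nodata)] = np.nan
    return np.maximum(chm, 0.0)  # np.maximum leaves NaN cells as NaN


def canopy_cover(chm, min_height_m=2.0):
    """Fraction of valid (non-NaN) cells taller than min_height_m."""
    valid = ~np.isnan(chm)
    n_valid = np.count_nonzero(valid)
    if n_valid == 0:
        return float("nan")
    return np.count_nonzero(chm[valid] > min_height_m) / n_valid


# Toy 2 x 2 rasters (meters); one DSM cell is no-data.
dsm = [[10.0, 12.0], [5.0, -9999.0]]
dtm = [[8.0, 2.0], [5.5, 3.0]]
chm = canopy_height_model(dsm, dtm)
print(chm)
print(canopy_cover(chm))
```

Masking before subtracting matters: without it, the -9999 cell would become a "height" of about -10000 m and drag every statistic with it.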

Fun additional take-home messages/resources:

- ISO 8601, the international standard for dates: YYYY-MM-DD

- Missing values: R uses NA, Python (NumPy/pandas) uses NaN, and data files often store no-data as a sentinel value such as -9999

- For cleaning messy data, check out OpenRefine, a free and open-source tool: http://openrefine.org/

- Excel is cray-cray; here are best practices for spreadsheets: http://www.datacarpentry.org/spreadsheet-ecology-lesson/

- Morpho (from DataONE) for entering metadata: https://www.dataone.org/software-tools/morpho

- Pay attention to file size with your git repositories - check out: https://git-lfs.github.com/. Git is good for things you do with your hands (like code), not for large data.

- Funny how many food metaphors are used in tech teaching: APIs as a menu in a restaurant; git add vs git commit as a grocery cart before and after purchase; finding GIS data is sometimes like shopping for ingredients in a specialty grocery store (that one is mine)...

- Markdown renderer: http://dillinger.io/

- MIT License, like Creative Commons for code: https://opensource.org/licenses/MIT

- The name "Jupyter" is a nod to Julia, Python, and R, who knew?

- There is a new project called "Feather", a fast file format for data frames that both Python and R can read and write: https://blog.rstudio.org/2016/03/29/feather/

- All the NEON airborne data can be found here: http://www.neonscience.org/data/airborne-data

- Information on the TIFF specification and TIFF tags is here: http://awaresystems.be/, though their TIFF Tag Viewer is Windows-only.
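The no-data and date conventions in the list matter as soon as you compute anything. A small sketch, assuming a -9999 sentinel in the input (the actual value varies by dataset): convert the sentinel to NaN, then use NaN-aware statistics. Python's standard library writes dates in ISO 8601 form; the date used here is arbitrary.

```python
from datetime import date

import numpy as np


def mask_nodata(values, nodata=-9999):
    """Replace a numeric no-data sentinel with NaN so it cannot skew statistics."""
    arr = np.asarray(values, dtype=float)
    return np.where(arr == nodata, np.nan, arr)


raw = [12.5, -9999, 14.0]
readings = mask_nodata(raw)
print(np.mean(raw))          # a large negative number: the sentinel is treated as data
print(np.mean(readings))     # nan: the masked value propagates
print(np.nanmean(readings))  # mean of the valid values only
print(date(2018, 1, 1).isoformat())  # ISO 8601 date string
```

NaN is the safer representation because it fails loudly (plain `np.mean` returns NaN) instead of quietly producing a wrong number.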

Thanks to everyone today! Megan Jones (our fearless leader), Naupaka Zimmerman (Reproducibility), Tristan Goulden (Discrete Lidar), Keith Krause (Waveform Lidar), Benjamin Gross (OpenTopography), Bridget Hass (coding lidar products).

Our home for the week

I left Boulder 20 years ago on a wing and a prayer with a PhD in hand, overwhelmed with bittersweet emotions. I was sad to leave such a beautiful city, nervous about what was to come, but excited to start something new in North Carolina. My future was uncertain, and as I took off from DIA that final time I basically had Tom Petty's Free Fallin' and Learning to Fly on repeat on my Walkman. Now I am back, and summer in Boulder is just as breathtaking as I remember it: clear blue skies, the stunning Flatirons making a play at outshining the snow-dusted Rockies behind them, and crisp, fragrant mountain breezes acting as my madeleine. I'm back to visit the National Ecological Observatory Network (NEON) headquarters, attend their 2017 Data Institute, and reinvest in my skill set for open, reproducible workflows in remote sensing.

Day 1 Wrap Up from the NEON Data Institute 2017

What a day! http://neondataskills.org/data-institute-17/day1/

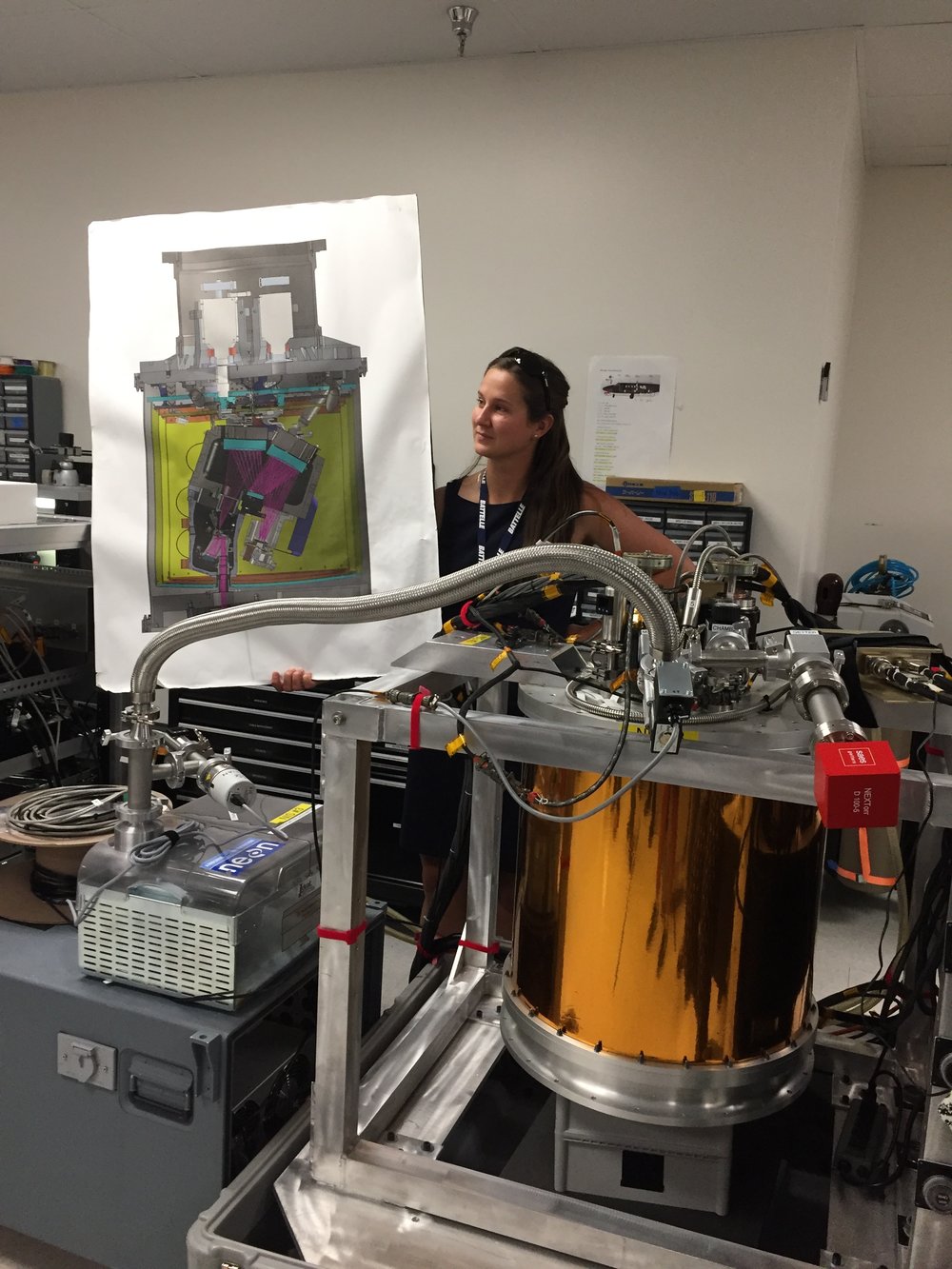

Attendees (about 30) included graduate students, old dogs (new tricks!) like me, and research scientists interested in building reproducible workflows into their work. We are a mix of ages and genders. The morning session focused on learning about the NEON program (http://www.neonscience.org/): its purpose, sites, sensors, data, and protocols. NEON, funded by NSF and managed by Battelle, was conceived in 2004 and, starting in January 2018, will go online for a 30-year mission providing free and open data on the drivers of and responses to ecological change. NEON data come from IS (instrumented systems), OS (observation systems), and RS (remote sensing). We focused on the Airborne Observation Platform (AOP), which uses two (soon to be three) aircraft, each carrying a hyperspectral sensor (from JPL; 426 bands at 5 nm, 380-2510 nm; 1 mrad IFOV; 1 m resolution at 1000 m AGL), lidar sensors (Optech, soon to be Riegl; discrete and waveform), and an RGB camera (PhaseOne D8900). These sensors produce co-registered raw data, which are processed at NEON headquarters into various levels of data products. Flights are planned to cover each NEON site once, timed to capture 90% or more of peak greenness, which is pretty complicated when distance and weather are taken into account. Pilots and techs are on the road and in the air from March through October collecting these data.
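The hyperspectral figures hang together: 426 bands of 5 nm each span exactly 2130 nm (380 to 2510 nm), and a 1 mrad IFOV at 1000 m above ground gives a ground pixel of about 1000 m × 0.001 = 1 m. A quick check in Python, assuming idealized, evenly spaced bands; the real band centers and widths are stored with the data and differ slightly.

```python
import numpy as np

# Nominal sensor figures quoted in the text; real band centers come from the file metadata.
N_BANDS = 426
START_NM, END_NM = 380.0, 2510.0

band_width_nm = (END_NM - START_NM) / N_BANDS
band_centers = START_NM + band_width_nm * (np.arange(N_BANDS) + 0.5)


def nearest_band(target_nm: float) -> int:
    """Index of the idealized band whose center is closest to target_nm."""
    return int(np.argmin(np.abs(band_centers - target_nm)))


def ground_resolution_m(altitude_m: float, ifov_mrad: float) -> float:
    """Pixel size on the ground: altitude x IFOV (small-angle approximation)."""
    return altitude_m * ifov_mrad / 1000.0


print(band_width_nm, band_centers[0], band_centers[-1])
print(nearest_band(653.0), ground_resolution_m(1000.0, 1.0))
```

The same altitude-times-IFOV rule shows why flying higher to cover more ground per pass coarsens the pixels proportionally.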

In the afternoon session, we took a fairly immersive dunk into Jupyter notebooks for exploring hyperspectral imagery in HDF5 format. We covered exploration, band stacking, widgets, and vegetation indices. We closed with a fast discussion about TGF (The Git Flow): the way to store, share, and version-control your data and code to ensure reproducibility. We forked, cloned, committed, pushed, and pulled. Not much more to write about, but the whole day was awesome!
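Band stacking and vegetation indices both start by picking bands by wavelength. A minimal NumPy sketch, assuming the reflectance cube has already been read from the HDF5 file into a (rows, cols, bands) array with a matching list of band-center wavelengths; reading the file (for example with h5py) and applying any scale factor or no-data mask are left out, and the 650/850 nm red and NIR targets are common choices rather than NEON-specified ones.

```python
import numpy as np


def nearest_band(wavelengths, target_nm):
    """Index of the band whose center wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths, dtype=float) - target_nm)))


def stack_bands(cube, wavelengths, targets_nm):
    """Stack the bands nearest to each target wavelength into a (rows, cols, k) array."""
    idx = [nearest_band(wavelengths, t) for t in targets_nm]
    return np.stack([cube[:, :, i] for i in idx], axis=-1)


def ndvi(cube, wavelengths, red_nm=650.0, nir_nm=850.0):
    """NDVI = (NIR - red) / (NIR + red); NaN where the denominator is zero."""
    red = cube[:, :, nearest_band(wavelengths, red_nm)].astype(float)
    nir = cube[:, :, nearest_band(wavelengths, nir_nm)].astype(float)
    denom = nir + red
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, (nir - red) / denom, np.nan)


# Toy 1 x 2 pixel cube with three bands (hypothetical reflectance values).
wavelengths = [550.0, 650.0, 850.0]
cube = np.array([[[0.08, 0.10, 0.50], [0.0, 0.0, 0.0]]])
print(ndvi(cube, wavelengths))
print(stack_bands(cube, wavelengths, [850, 650, 550]).shape)
```

Selecting bands by wavelength rather than by hard-coded index keeps the code working if a file's band list differs from the one you tested on.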

Fun additional take-home messages:

- NEON is amazing. I should build some class labs around NEON data, and NEON classroom training materials are available: http://www.neonscience.org/resources/data-tutorials

- Making participants do organized homework is necessary for complicated workshop content: http://neondataskills.org/workshop-event/NEON-Data-Insitute-2017

- HDF5 as a possible alternative data format for lidar, holding both discrete and waveform data

- NEON imagery data are FedExed to headquarters daily after collection

- I am a crap python coder

- #whofallsbehindstaysbehind

- Tabs are my friend

Thanks to everyone today, including: Megan Jones (Main leader), Nathan Leisso (AOP), Bill Gallery (RGB camera), Ted Haberman (HDF5 format), David Hulslander (AOP), Claire Lunch (Data), Cove Sturtevant (Towers), Tristan Goulden (Hyperspectral), Bridget Hass (HDF5), Paul Gader, Naupaka Zimmerman (GitHub flow).

Our third GIF Spatial Data Science Bootcamp has wrapped! We had an excellent 3 days with wonderful people from a range of locations and professions and learned about open tools for managing, analyzing and visualizing spatial data. This year's bootcamp was sponsored by IGIS and GreenValley Intl (a Lidar and drone company). GreenValley showcased their new lidar backpack, and we took an excellent shot of the bootcamp participants. What is Paparazzi in lidar-speak? Lidarazzi?

Here is our spin: We live in a world where the importance and availability of spatial data are ever increasing. Today’s marketplace needs trained spatial data analysts who can:

- compile disparate data from multiple sources;

- use easily available and open technology for robust data analysis, sharing, and publication;

- apply core spatial analysis methods;

- and utilize visualization tools to communicate with project managers, the public, and other stakeholders.

At the Spatial Data Science Bootcamp, participants learn how to integrate modern spatial data science techniques into their workflows through hands-on exercises that leverage today's latest open source and cloud/web-based technologies.